We’re getting closer to the end, presumably, of the most static design era in the iPhone’s history. While Apple continues to iterate on the specs of its most important product, the iPhone 16 product line is visually part of a generation that dates back four years to the iPhone 12.

On the one hand, it’s a fact that reveals just how much the pace of smartphone design innovation has slowed. This is the longest Apple has ever stuck with a single iPhone design generation. On the other hand, Apple largely stopped redesigning its laptops in 2010, and nobody seems to mind too much. There’s something to be said for a device slowly iterating toward its ideal form, and I do wonder if Apple thinks that the iPhone 5-esque styling of the iPhone 12/13/14/15/16 is the equivalent of the classic MacBook Air/MacBook Pro design.

The problem is this: History suggests that every time Apple does a physical redesign of the iPhone, there’s a surge in iPhone sales. That’s quite a motivator, given the iPhone’s place as the generator of more than half of Apple’s corporate revenue.

So what do you do if you’re Apple? You (again, presumably) try to work on new technological breakthroughs that will let you change things up more dramatically—the introduction of Face ID in the iPhone X let the home button go away, for instance—while you keep pushing forward with the specs and internals in the meantime. It’s not exciting, but neither is it a moral failing.

I’d sure rather be using an iPhone 16 Pro than an iPhone 12 Pro, because the newer model is better in almost every way. That’s what years of iteration will do. Since most iPhone buyers do not upgrade once a year, those individual iterations only really matter in the cumulative sense. So before I dig into this year’s collection of choices and iterations, it’s worth considering the grand sweep of time (iPhone Pro edition):

- iPhone 13 Pro: ProMotion, 3x telephoto lens, chip improvements

- iPhone 14 Pro: Dynamic Island, Crash Detection, SOS via satellite, 48MP camera, always-on display, brighter display, Action Mode, chip improvements

- iPhone 15 Pro: Action Button, USB-C port, 24MP fusion image, chip improvements, titanium exterior, and (in hindsight) Apple Intelligence support

This year, that list grows to include larger displays, a new 48MP camera, 4K120 video support, 48MP ultrawide camera, new Photographic Styles workflow, surround audio, 5× telephoto lens (which arrived last year on the Pro Max model only), and (of course) chip improvements courtesy of the new A18 Pro chip.

Would it be fun if Apple unveiled an entirely new, never-before-seen iPhone design? Yes, it would. Maybe next year. But the company needs to sell phones in the meantime, and as ever, it has spent the last year adding a bunch of improvements that, combined with those from previous years, make for a solid update.

There are four models

While this review is primarily focused on the iPhone 16 Pro, I’ve had a chance to use all four new iPhone models. The iPhone 16 Pro Max is really only different from the Pro model in that it’s larger (and has commensurately greater battery life); the iPhone 16 and iPhone 16 Plus are lacking some pro features, but in many ways the gaps between the Pro and non-Pro models are the smallest they’ve been in a while.

Let’s start with the single best feature of the iPhone 16 and 16 Plus, and the one in which those phones utterly blow away their more expensive Pro counterparts: color. Apple’s behavior strongly suggests that it’s just not interested in offering striking color options on high-end iPhones, and its choices of colors on the lower-end models are often muted and questionable. Not so this year, because the iPhone 16 and 16 Plus come in three delightful colors (in addition to more staid white and black models): Ultramarine, Teal, and Pink.

I’m colorblind and can’t see pink very well, and even I appreciate this beautiful pink. The teal is the quintessential blue-green tone that I’d absolutely expect to see on an ’80s prom dress or the uniform of a sports expansion team from the ’90s. And the ultramarine… I don’t even know what to say. It’s a gorgeous, bright blue with purple undertones that is my favorite iPhone color of all time.

However, here’s what you’ll give up if you want to take the plunge into ultramarine: The always-on, high-refresh-rate ProMotion display; a small amount of performance due to the A18 rather than A18 Pro chip; a third, telephoto camera lens; a higher-resolution ultrawide/macro camera; 4K video capture at 120fps; and a titanium frame in any shade you want as long as it’s monochrome.

For my money, the killer features there are the extra camera and the display. When my iPhone Pro is charging, it’s parked in my kitchen on a magnetic stand and displays the current time, temperature, and weather forecast in StandBy mode. I’d miss that if I gave up the always-on display. And I find myself using the telephoto lens more than I expected to, especially now that the iPhone 16 Pro has the 5× zoom that last year’s Pro Max had.

Still… the gap is smaller than I expected. Would I be perfectly happy using an iPhone 16 in ultramarine for the next few years? You know, I think I would. If you like the colors, don’t care about the screen or telephoto camera, and want to save some money, well, let’s just say it’s been a while since the iPhone and iPhone Pro product lines have been this close.

There have also been moments in the last few years when it looked like the iPhone Pro Max was slowly accelerating away from the iPhone Pro, as it became more of its own creature, one that could maybe even have been renamed iPhone Ultra. This year, though, the Pro Max comes back to the pack—it’s literally the same phone as the iPhone 16 Pro, just bigger. Maybe in the future, Apple will find ways to differentiate that model beyond size, or perhaps it will turn its attention to other designs that raise the bar on what it means to be the most expensive iPhone in existence. Either way, this year the Pro Max has nothing special to differentiate it from the Pro. If you don’t need the display size or battery life, you don’t need the bigger phone.

Happy Clickmas (War on Buttons is Over)

“Why did Steve Jobs wear a turtleneck? Because he hated buttons.”

During the Steve Jobs and Jony Ive era, minimalism was everything. The pair believed that tech gadgets were too fiddly and needed to be streamlined. So the iPhone had no keyboard, the Apple TV remote didn’t have a number pad, and the iPod had a simple scroll wheel. It was a good impulse, but as so often happens, the impulse can be taken too far and result in bad decisions like a buttonless iPod shuffle or a professional laptop without enough ports.

As a survivor of the War on Buttons, I’m happy to report that the tide has shifted, and today’s Apple has decided that buttons… are… good? Last year brought the Action Button to the iPhone 15 Pro models, and this year all models sport both the Action Button and a new Camera Control button. (Even the most enthusiastic fan of buttons might caution Apple to slow its one-new-button-a-year pace, lest the iPhone become a BlackBerry by 2050.)

I love the idea of the Camera Control button. It’s literally a shutter button on the side of your iPhone, so you can pull out your phone and start snapping pictures entirely by feel. (A first press opens the Camera app, and from then on every press takes a photo; if your phone is locked, you have to press to unlock it first. Pressing and holding records video.) Muscle memory is a thing. Navigating a touchscreen interface can be fussy and frustrating, especially in bright light or if you’re rushing to capture an important moment.

That said, I don’t think Apple executed Camera Control as well as it should have. The basic functionality—click to shoot—works pretty well, with real button movement combined with a crunchy haptic signal. It takes a little getting used to, but not much, and I found that I’m quite capable of taking pictures with one hand when using Camera Control. (When fully zoomed in, I find that pressing Camera Control makes my iPhone shake a lot more than just tapping on the screen to take a picture; the camera system seems to compensate for this, but I still don’t like the idea that my picture could be degraded because I chose to use a physical button.)

It’s the rest of it that I have issues with. In true Magic Kingdom fashion, Apple has taken a simple shutter button and plussed it: the button is also force sensitive (so it can sense intermediate pressure that comes before a full click) and its surface is like a tiny trackpad (so it can sense finger swipes). I admire packing that much technology into a little button and can see how both of those technologies could enhance the basic camera interface.

But I think Apple’s implementation just isn’t up to the challenge. It’s overly complex, for starters. Before I deconstruct what Apple is doing, let me describe how it works by default: If you press halfway down, a menu strip pops out (there’s a cute animation, of course) on the screen right beneath the button. This strip contains a set of functions that you can switch between by swiping your finger on the button, so you can switch between cameras, zoom, or adjust exposure. To switch between different function sets, you double-squeeze (like a Mac double-click) to go up one level in the menu hierarchy, then swipe to choose a different function set, then squeeze to enter that set, at which point you can swipe through a different set of controls.
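
A toy Python model makes the number of gestures in that default hierarchy concrete. Everything in it is a hypothetical simplification written for illustration, not Apple's API or implementation: the class name, the three control names (taken loosely from the ones mentioned above), the control the strip opens on, and the idea of adjusting a control in integer steps.

```python
from dataclasses import dataclass, field

# Hypothetical control names, loosely based on the ones the strip offers.
CONTROLS = ["Exposure", "Zoom", "Cameras"]


@dataclass
class CameraControlModel:
    """Toy two-level menu (hypothetical, not Apple's implementation).

    "control" level: swiping adjusts the selected control.
    "menu" level: swiping picks which control to adjust.
    """

    level: str = "hidden"  # "hidden", "control", or "menu"
    selected: int = 1  # index into CONTROLS; assume the strip opens on Zoom
    values: dict = field(default_factory=lambda: {name: 0 for name in CONTROLS})

    def light_press(self) -> None:
        # Half-press: show the strip, or enter the control highlighted in the menu.
        if self.level in ("hidden", "menu"):
            self.level = "control"

    def double_light_press(self) -> None:
        # Double half-press: go up one level to the list of controls.
        if self.level == "control":
            self.level = "menu"

    def swipe(self, steps: int) -> None:
        # Swipe on the button surface: adjust a value, or move the highlight.
        if self.level == "control":
            self.values[CONTROLS[self.selected]] += steps
        elif self.level == "menu":
            self.selected = (self.selected + steps) % len(CONTROLS)


# Zoom in two steps, then lower exposure by one step:
cc = CameraControlModel()
cc.light_press()         # strip pops out on Zoom
cc.swipe(2)              # zoom in two steps
cc.double_light_press()  # up one level to the list of controls
cc.swipe(-1)             # highlight Exposure
cc.light_press()         # enter Exposure
cc.swipe(-1)             # lower exposure one step
print(cc.values)         # {'Exposure': -1, 'Zoom': 2, 'Cameras': 0}
```

Even in this simplified form, that's six gestures for two adjustments, before the shutter is ever pressed.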

The idea here is to emulate the physical controls on real cameras, which frequently use a half-press on the shutter button to focus, and (depending on the mode) might use a dial to adjust aperture or shutter speed. It’s powerful, and I think that power users can learn to navigate it fairly well, but there’s no way that this should be the default interface for the Camera Control button. Even when you finally grasp the amount of pressure that triggers the half-press but not a full press, you then have to master doing it twice in quick succession, and now you’re swiping between different menus in a hierarchy.

I appreciate the ambition, but this is too much complexity for a default feature, and if users get frustrated by accidentally swiping out of the right mode and wrecking their photo (which is very easy to do), they may swear off Camera Control entirely. On my dedicated digital cameras, the default in Automatic mode is that a half-press lets me set a focus target (via a crosshair or an intelligent object selection) and hold that target, and the adjustment wheel does literally nothing because I’m in Automatic mode. Apple needs to go in this direction—and the saving grace of Camera Control may be that software updates can solve its biggest issues.

For my money, by default the half-press should emulate a traditional camera’s focus lock (Apple says this is coming in a future software update; why in the world was it not in the first version of this software?!) and the whole double-squeeze/swipe thing should just be turned off. (Obviously, it should still be possible to enable it. I’m not advocating for the removal of the feature, just against having it on by default.) I’m open to the idea of parking something very basic on the swipe control, like changing cameras or changing modes (video, portrait, etc.), but there’s too much baked in and it’s just too complex.

I appreciate that Apple tried to pull out all the stops with Camera Control, but it overshot the target, which is regular people who just want to take a picture.

A peculiar side effect of the arrival of Camera Control is that the Action Button is having a bit of an existential crisis. When it debuted on the iPhone 15 Pro models last year, Apple extolled the virtues of the Action Button as… a way to take pictures with a hardware button. One year in existence, and it’s already lost its job. Tough times.

Of course, the Action Button has always been customizable, and it continues to be so. Apple’s language describing the Action Button has been adjusted accordingly, but any list of applicable features will eventually land you in the realm of writing Shortcuts. I like Shortcuts a lot, and I do think that with a little bit of help, regular people can create them, but resorting to Shortcuts to buttress the Action Button’s viability feels like a failure on Apple’s part. Not only should the Shortcuts Gallery (remember that?) be full of recommended Action Button Shortcuts, but perhaps Apple needs to revamp its beautiful Action Button settings interface to add more functionality.

Maybe the Action Button settings need a little more sophistication so you can choose different actions based on some basic triggers (like time, device orientation, or location: basic stuff that can be built via Shortcuts but probably shouldn’t require that level of effort). Or maybe the solution is for Apple Intelligence to gain the ability to generate that Shortcut for you? I don’t know what the right approach is, but I do think that the Action Button needs to be made more versatile for everyone, especially now that the new button on the block has taken over its original default action.

Cameras in style

The iPhone 16 Pro features an updated version of the 48-megapixel camera introduced two years ago. The company has settled on an approach that is now inscribed in the camera’s name, dubbing it the “Fusion camera,” presumably because of the technique that bins the data from the 48-megapixel sensor into a 12MP image with superior light collection and combines it with the finer detail of a full 48MP capture, resulting in a fused 24MP image that is (ideally) the best of both worlds.
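
The binning half of that description comes down to simple arithmetic: combining each 2×2 block of pixels into one leaves a quarter of the pixel count (48MP becomes 12MP), while each output pixel gathers the light of four. The Python sketch below is a simplification under stated assumptions: it treats the sensor as a single grid of brightness values and ignores the color filter array (real quad-pixel sensors combine neighboring same-color pixels), and it makes no attempt at Apple's own fusion step. The function name is illustrative.

```python
def bin_2x2(sensor: list[list[int]]) -> list[list[int]]:
    """Sum each non-overlapping 2x2 block of pixel values into one pixel.

    Simplified: treats the sensor as one grid of brightness values and
    ignores the color filter array a real sensor has.
    """
    rows, cols = len(sensor), len(sensor[0])
    if rows % 2 or cols % 2:
        raise ValueError("sensor dimensions must be even")
    return [
        [
            sensor[r][c] + sensor[r][c + 1] + sensor[r + 1][c] + sensor[r + 1][c + 1]
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


# A 4x4 "sensor" (16 pixels) bins down to 2x2 (4 pixels): a quarter of the
# count, just as 48MP bins down to 12MP.
tiny_sensor = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(bin_2x2(tiny_sensor))  # [[14, 22], [46, 54]]
```

Summing (rather than averaging) the four values is what models the extra light collection; the trade-off is that fine detail within each 2×2 block is lost, which is what the full-resolution capture is there to recover.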

What I can report is that I think Apple’s camera game continues to be strong, aided by the spectacular 5× zoom that’s now available on the more mainstream size of iPhone Pro. The 24MP fused images from the main camera look very good. I was honestly stunned by some of the photos I shot at a concert from about eight rows back. I ended up with photos that were colorful, clear, and strikingly close, and then I double-tapped on my iPad and discovered that I wasn’t even zoomed in all the way. There was even more resolution to be had. It’s really remarkable how good these cameras are.

That said, these cameras are also complicated, even beyond what I’ve already written about the new Camera Control button. There are so many different icons and modes in the Camera app these days that it can be really daunting. Do you want Action Mode, or to shoot regular video at 4K120? Do you want Night mode, or to fire a flash, or just for the phone to take its best shot without either? I know there’s a lot of intelligent software making decisions in the background, but sometimes when confronted with something to shoot, I find myself spoiled for choice. Sometimes I am paralyzed by the options.

At least one innovation on these phones will help me not worry about what’s going on in the moment, because I know I can fix things later: the new iteration of Photographic Styles. When Apple introduced the original Photographic Styles a few years back, it was a revelation because Apple was allowing users to alter the decisions being made deep down in the photographic pipeline, in order to generate images that were more pleasing to the users (rather than to Apple’s own decision-makers). Great idea, but it meant that those decisions were, like Apple’s own assumptions in previous cameras, baked into the final photos. Any later adjustments would have to work on the final, already-processed pixels. You could never go back and recapture the moment, deep in the pipeline, and reprocess that image. Captures only happen once.

That’s… not quite true anymore. The new Photographic Styles feature, basically Styles 2.0, still allows users to pick their favorite styles and adjust them in ways that please them, but the results are more changeable after the fact than before. Apple is capturing a load of camera data and retaining it in the HEIF container file, meaning that you can choose to reprocess the image with a different set of styles after the fact. It’s not quite re-editing a RAW format file, but it does mean you can do things like shoot using a black-and-white style and then restore the color data if you change your mind!
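
The practical difference between baked-in styles and re-editable ones is what gets re-rendered. In this toy Python model, the photo keeps its original capture data alongside a style setting, and every render starts from that retained data, which is why a black-and-white style can be undone. The names (`EditablePhoto`, `apply_style`, and the "standard" and "mono" styles) are hypothetical, and this is not a description of what Apple actually stores in the HEIF file; it only illustrates the principle.

```python
from dataclasses import dataclass

Pixel = tuple[int, int, int]  # (R, G, B), each 0-255


def apply_style(pixels: list[Pixel], style: str) -> list[Pixel]:
    """Render pixels with a hypothetical style: "mono", or unchanged otherwise."""
    if style == "mono":
        # Rec. 601 luma weights, rounded to an integer gray level.
        grays = [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in pixels]
        return [(y, y, y) for y in grays]
    return list(pixels)


@dataclass
class EditablePhoto:
    captured: list[Pixel]  # retained capture data (stand-in for the extra data in the file)
    style: str = "standard"

    def render(self) -> list[Pixel]:
        # Always re-render from the retained capture, never from a previous render.
        return apply_style(self.captured, self.style)


photo = EditablePhoto(captured=[(200, 40, 40)], style="mono")
print(photo.render())  # [(88, 88, 88)]
photo.style = "standard"  # change your mind later
print(photo.render())  # [(200, 40, 40)]
```

A baked-in style, by contrast, would overwrite `captured` with the mono output, and no later edit could bring the red back.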

I wish Photographic Styles were simpler, though. There are two different sets of styles, and then you can dial settings in by dragging your finger up and down, left and right, in a square. There’s another setting that’s a slider just below the square. I’m not sure I fully understand what those three axes actually do to the image, but at least you can watch the changes on the photo you’re editing and decide for yourself what you find aesthetically pleasing. Again, I applaud Apple for trying to find simple, intuitive ways of giving users access to extremely complex concepts… but it still feels like a lot. I’d kind of like to just dial in that I prefer my images a bit warmer and with a little more contrast and be done with it, you know?

Apple silicon marches on

With the iPhone 16 series, Apple’s releasing two different chips: the A18, which powers the iPhone 16 and 16 Plus, and the A18 Pro, which powers the iPhone 16 Pro and Pro Max. These are different chips, marking the first time in iPhone history that Apple has shipped two brand-new chip designs in the same set of iPhone models. Though the A18 has five GPU cores and the A18 Pro has six, these aren’t two “binned” versions of the same chip. A detailed analysis shows that they’re quite different.

While both chips are built on the second-generation 3nm process from Apple’s chipmaking partner, TSMC, my guess is that the A18 Pro is using the latest CPU and GPU core designs from the M4 chip series, while the regular A18 is a new budget design using some older core generations. I suspect the A18 will be repurposed in numerous forthcoming Apple products that require a base level of Apple Intelligence, from iPads to HomePods to Apple TVs.

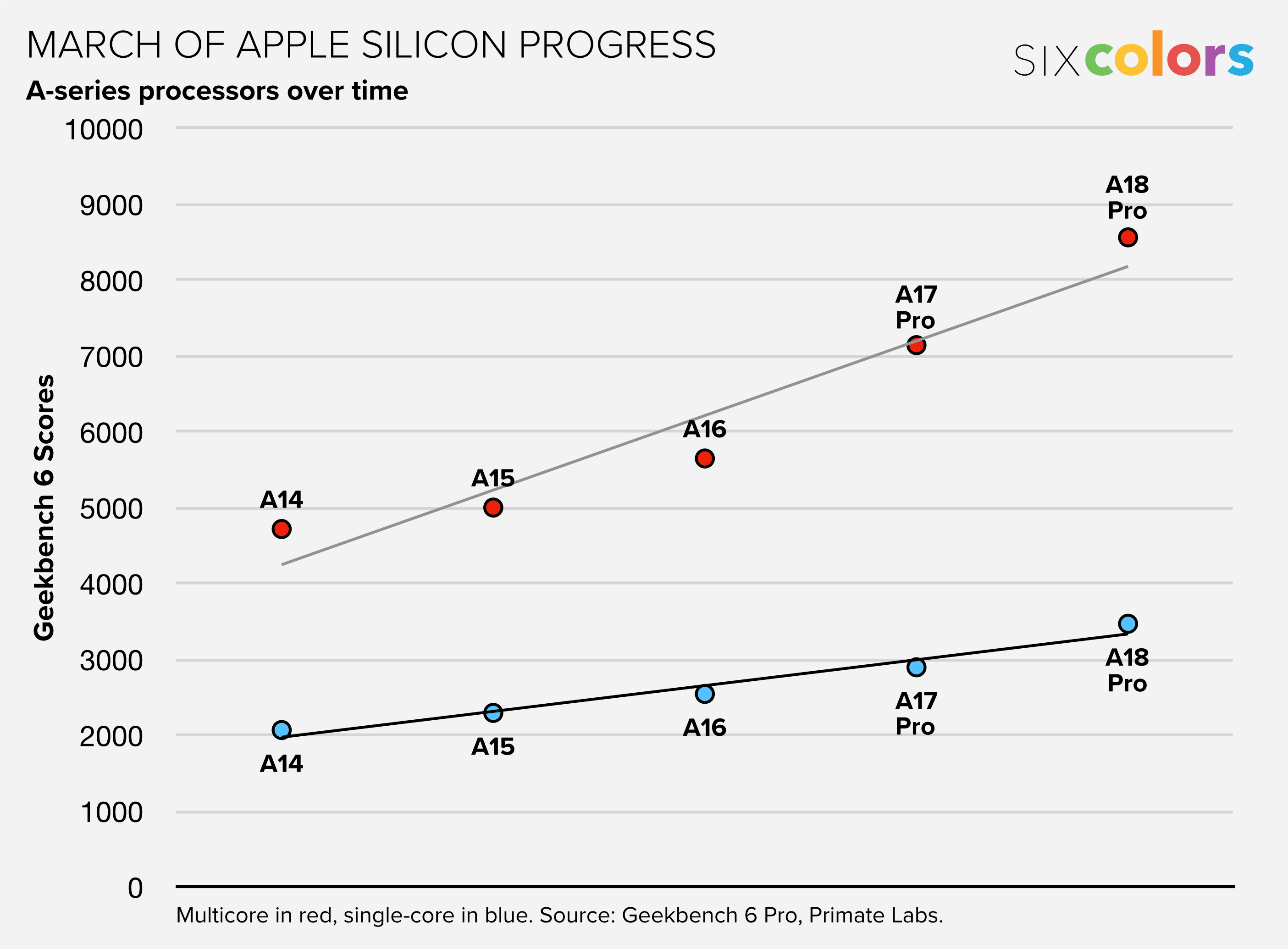

To illustrate Apple’s relentless pace forward with chip design, I’ve plotted the Geekbench scores for the last nine generations of top-level iPhone processors. Single-core performance has risen by an average of 15% per year over the last five years; this year it’s 20%. During that same five-year period, multi-core performance has improved by an average of 18%, and this year it’s 20%. Oh, and GPU performance per core? Up 20% too. The whole thing’s just… up 20% from last year’s A17 Pro chip.
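
Those per-year figures compound. Assuming "average" here means a compound (geometric) annual rate, which the chart's description doesn't specify, a short Python sketch shows what the five-year averages add up to, along with the inverse calculation from two benchmark scores. The function names are illustrative, and no actual Geekbench scores are used.

```python
def compound_multiplier(annual_rate: float, years: int) -> float:
    """Total speedup after `years` of growth at `annual_rate` (0.15 means 15%)."""
    return (1 + annual_rate) ** years


def annualized_rate(start_score: float, end_score: float, years: int) -> float:
    """Compound annual growth rate implied by a starting and an ending score."""
    return (end_score / start_score) ** (1 / years) - 1


# Five years at the averages quoted above:
print(f"single-core: {compound_multiplier(0.15, 5):.2f}x")  # single-core: 2.01x
print(f"multi-core: {compound_multiplier(0.18, 5):.2f}x")   # multi-core: 2.29x
```

In other words, 15% a year for five years works out to roughly doubling single-core performance.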

I should mention a few other general improvements to the hardware in this generation. Battery life appears to be noticeably better than in the previous generation, though whether that means an extra 30 minutes, an extra hour, or two extra hours depends on how you use your phone. The phones also never felt hot in my hand, unlike the previous generation, which suggests that Apple has improved its thermal management.

And I want to single out the first-time support for Wi-Fi 7. I tested an iPhone 16 on a symmetric gigabit connection via Wi-Fi 7 and the results were 950Mbps up and down—essentially the same speed that I got from a Mac using a wired Ethernet connection, and faster even than the Wi-Fi 6E connection I got on an M3 MacBook Air. Most people won’t have access to Wi-Fi 7 networks for a little while, but… wow. It’s very impressive.

“Ready for Apple Intelligence”

Judging by Apple’s marketing materials, the most important selling point of the iPhone 16 series is that it’s ready for Apple Intelligence. The company’s website and press kits are full of Apple Intelligence features, many (most?) of which won’t be available to customers until sometime in early 2025. As I write this story (several weeks after the release of the iPhone 16 models!), Apple Intelligence is still only available to customers who opt in to a Public Beta version of iOS 18.1.

So, how is Apple Intelligence? I couldn’t tell you. Not only would it be somewhat irresponsible to evaluate beta software features in a hardware review, but Apple Intelligence will hardly be finished when iOS 18.1 ships. In fact, it’ll only have begun. Apple plans to continue fulfilling the promise it made back in June from now until, well, June 2025.

I don’t need to go too far out on a limb to predict that some of the new Apple Intelligence features will be great, and others will be uninteresting. I do suspect that the longer it takes for a feature to reach us, the more interesting it might be. I’m particularly optimistic about the potential of combining a device’s Semantic Index with the App Intents in individual apps to construct a next-generation Siri that knows everything about who I am and can do whatever I demand of it. I realize that’s a broad, unattainable goal, but progress toward it would be pretty great.

Still: Don’t ever buy a piece of hardware because you’re excited about a promised, future feature. Apple’s big enough and high-profile enough to have a pretty good likelihood of delivering what it’s promising, but there’s no reason to spend hundreds of dollars based on the hazy promise of a feature that’s not even in beta yet, with nobody to tell you if it really works or if it’s more hype than substance.

If you want to get excited about buying the iPhone 16 series, there are plenty of reasons. The iPhone 16 and 16 Plus come in gorgeous colors, sport an upgraded main camera, and gain Camera Control, Photographic Styles 2.0, and the Action Button. The iPhone 16 Pro gains a 5× zoom, and both the Pro and the Pro Max get Camera Control and Photographic Styles. That’s the stuff we should all be focused on right now. As for Apple Intelligence, Apple is going to have to prove its worth over the next year.

If you appreciate articles like this one, support us by becoming a Six Colors subscriber. Subscribers get access to an exclusive podcast, members-only stories, and a special community.