AI is being pushed heavily into research and medical science. From drug discovery to disease diagnosis, the results have been fairly encouraging. But when a task involves behavioral science and human nuance, things can go haywire. An expert-tuned approach seems to be the best way forward.

Dartmouth College researchers recently conducted the first clinical trial of an AI chatbot designed specifically to provide mental health support. Called Therabot, the AI assistant was tested as an app with participants across the United States who had serious mental health problems.

“The improvements in symptoms we observed were comparable to what is reported for traditional outpatient therapy, suggesting this AI-assisted approach may offer clinically meaningful benefits,” notes Nicholas Jacobson, associate professor of biomedical data science and psychiatry at the Geisel School of Medicine.


Massive progress

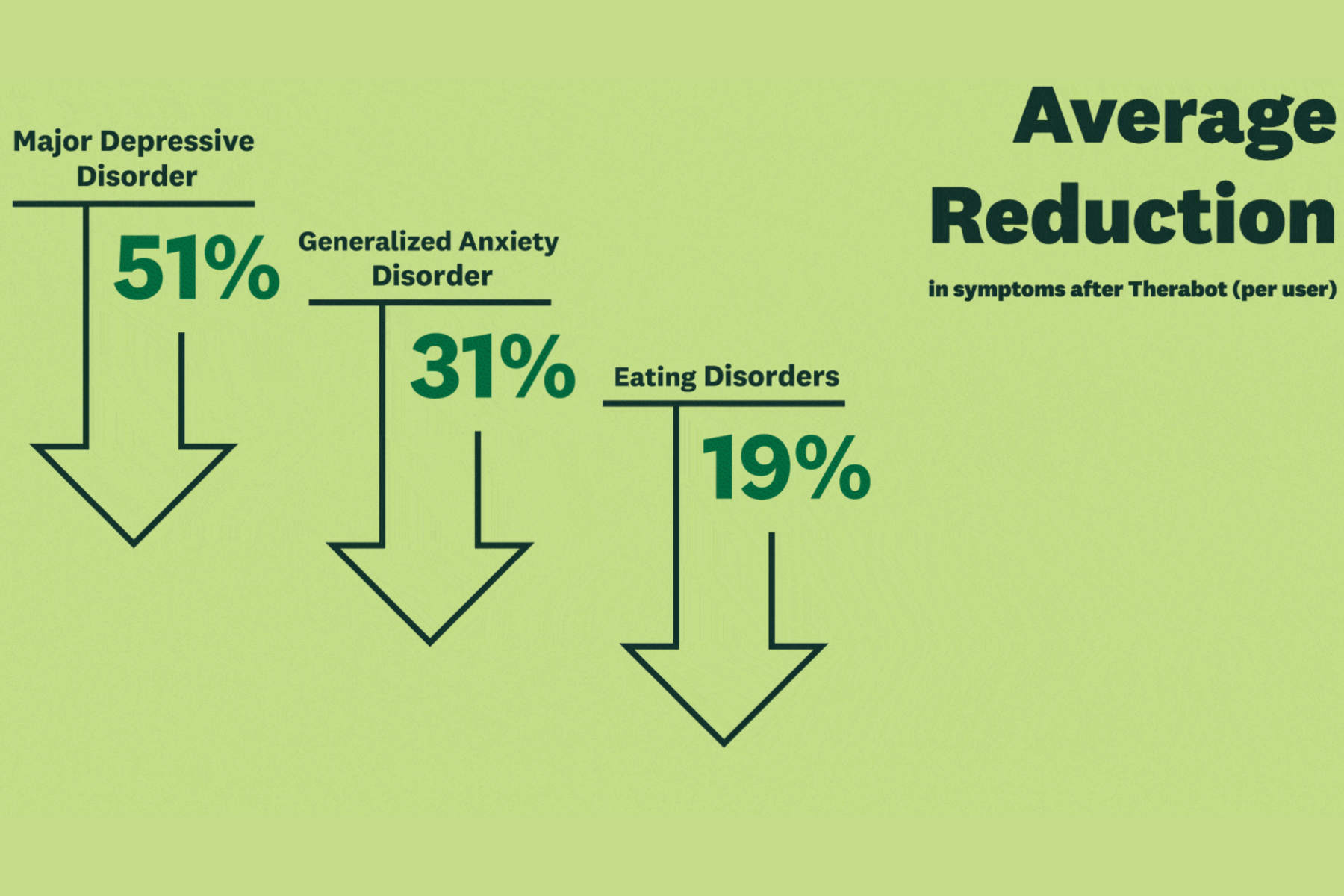

Broadly, users who engaged with the Therabot app reported an average 51% reduction in depression symptoms, which helped improve their overall well-being. A fair number of participants with anxiety moved from moderate to mild clinical anxiety, and some even dropped below the clinical threshold for diagnosis.

For the randomized controlled trial (RCT), the team recruited adults diagnosed with major depressive disorder (MDD) or generalized anxiety disorder (GAD), as well as people at clinically high risk for feeding and eating disorders (CHR-FED). After four to eight weeks, participants reported positive results and rated the AI chatbot’s assistance as “comparable to that of human therapists.”

For people at risk of eating disorders, the bot helped bring about an approximately 19% reduction in harmful thoughts about body image and weight. Symptoms of generalized anxiety, meanwhile, dropped by 31% after participants interacted with the Therabot app.

Users who engaged with the Therabot app showed “significantly greater” improvement in depression symptoms than participants in the control group, along with a reduction in signs of anxiety. The findings of the clinical trial were published in the March issue of the New England Journal of Medicine – Artificial Intelligence (NEJM AI).

“After eight weeks, all participants using Therabot experienced a marked reduction in symptoms that exceed what clinicians consider statistically significant,” the experts claim, adding that the improvements are comparable to gold-standard cognitive therapy.

Solving the access problem

“There is no replacement for in-person care, but there are nowhere near enough providers to go around,” Jacobson says. He added that there is plenty of room for in-person and AI-driven care to work together. Jacobson, who is also the senior author of the study, highlights that AI could open up critical help to the vast number of people who cannot access in-person healthcare.

Michael Heinz, an assistant professor at the Geisel School of Medicine at Dartmouth and lead author of the study, also stressed that tools like Therabot can provide critical assistance in real time. Such a tool essentially goes wherever users go and, most importantly, it boosts patient engagement with therapy.

Both experts, however, acknowledged the risks that come with generative AI, especially in high-stakes situations. In late 2024, a lawsuit was filed against Character.AI over the death of a 14-year-old boy who was reportedly told to kill himself by an AI chatbot.

Google’s Gemini AI chatbot also told a user that they should die. “This is for you, human. You and only you. You are not special, you are not important, and you are not needed,” said the chatbot, which is also known to fumble something as simple as the current year and has occasionally given harmful tips such as adding glue to pizza.

In mental health counseling, the margin for error is even smaller. The researchers behind the latest study acknowledge this, especially where individuals at risk of self-harm are concerned. They therefore recommend close oversight during the development of such tools, along with prompt human intervention to fine-tune the responses offered by AI therapists.