Rita El Khoury / Android Authority

TL;DR

- A leak from Google’s gChips division has revealed new AI tricks and software-based camera features for the Pixel 10 and Pixel 11.

- Potential camera improvements include support for 4K 60fps HDR video, 100x zoom, an upgrade to the Cinematic Blur feature, and a new “Ultra Low Light” video mode for capturing quality footage in very dim lighting.

- The upcoming Pixel devices may also introduce ML-based always-on features related to health monitoring and activity tracking.

Software features are usually the last thing to leak about Pixel devices. While nearly every hardware detail typically surfaces months in advance, we usually learn about new software only right before launch.

That's not the case this time. Thanks to a massive leak from Google's gChips division, Android Authority has viewed credible documents that hint at several new features we can expect from the Pixel 10 and Pixel 11.

Even more AI

C. Scott Brown / Android Authority

AI features are the main focus of Google's current phones, and the upcoming Pixels are no different.

Thanks to its improved TPU, Tensor G5, which is expected to debut in the Pixel 10 series, will get "Video Generative ML" features. The name is vague, but the stated use case explains a bit more: "Post-capture Generative AI-based Intuitive Video Editing for the Photos app." We can only guess what this means exactly, but it sounds like Google will let users edit their videos more easily with AI that actually understands the video's content. The feature might also be available in the YouTube app, specifically for YouTube Shorts.

Google is also exploring several AI features for photo editing, including "Speak-to-Tweak," which appears to be an LLM-based editing tool, and "Sketch-to-Image," which is self-explanatory and resembles a similar feature in Samsung's Galaxy AI. There is also prior evidence that Google is developing a Sketch-to-Image feature for Gemini.

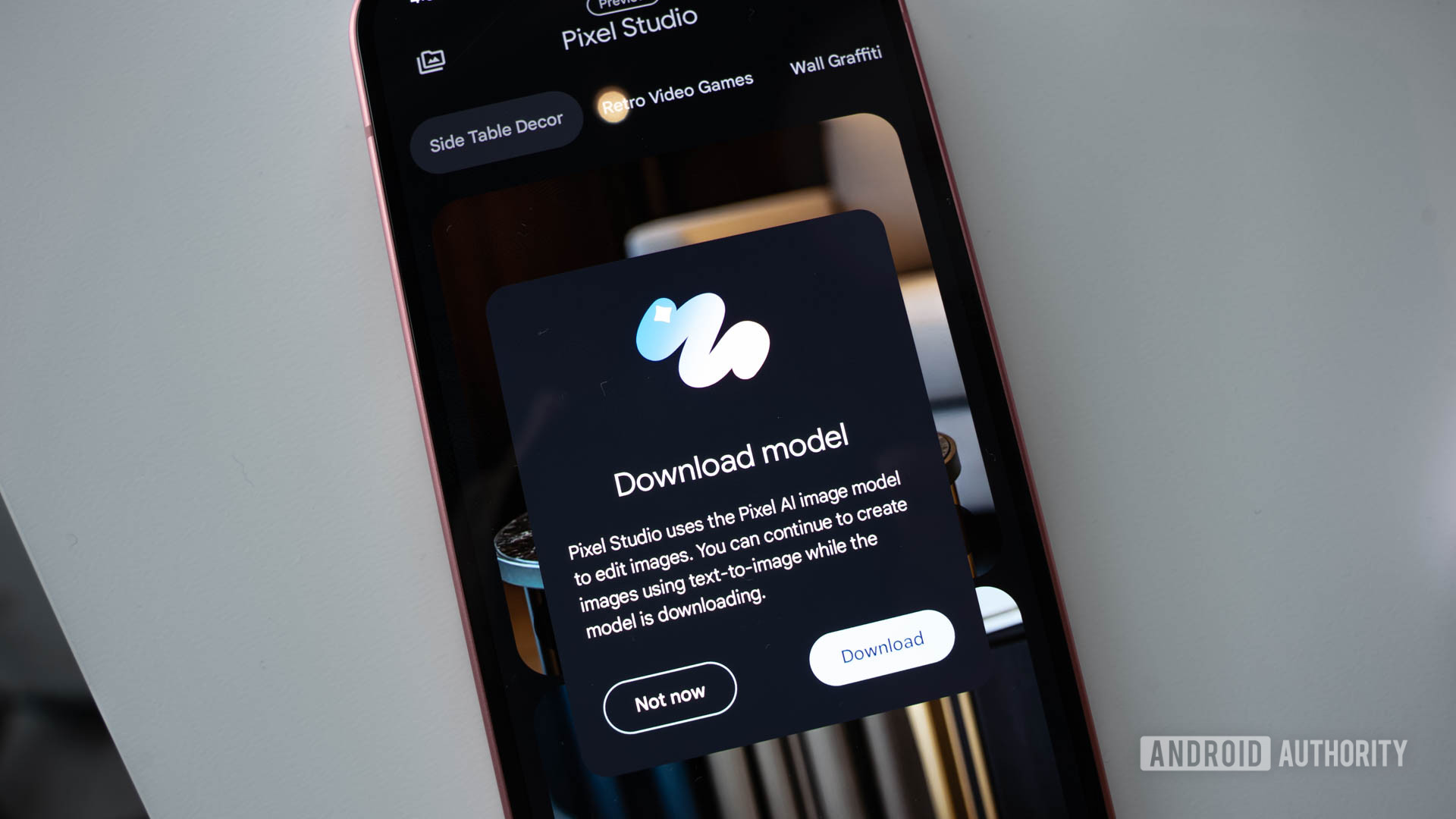

Google is also working on a "Magic Mirror" feature, but unfortunately, the leaked documents offer no further context. In addition, Tensor G5 should be capable of running Stable Diffusion-based models locally, which could let the Pixel Studio app generate images on-device instead of relying on the current server-based solution.

Promising camera improvements

C. Scott Brown / Android Authority

Google focuses heavily on the cameras of its phones, so it's not surprising that many of the new features relate to this area. First, Tensor G5 finally supports 4K 60fps HDR video, whereas previous models topped out at 4K 30fps for HDR video.

The Pixel 11 may also offer 100x zoom through machine learning for both photos and videos. However, we expect the algorithms for each to differ, so don't expect identical quality across the two. The documents also reference a "next-gen" telephoto camera designed to enhance this feature, suggesting that significant hardware upgrades could be on the way as well.

Cinematic Blur is getting an upgrade on the Pixel 11, too, with support for 4K 30fps and a new "video relight" feature that appears to change the lighting in videos. Both features are made possible by a new "Cinematic Rendering Engine" in the chip's image signal processor. This dedicated hardware block also cuts the power draw of recording video with blur by almost 40%.

Last, and perhaps most exciting, the Pixel 11 may get "Ultra Low Light" video, also referred to as "Night Sight video." A feature with this name already exists, but it relies on cloud processing, whereas this one runs fully on-device. Google specifically lists 5 to 10 lux as the target lighting conditions. That is very low light, similar to a dimly lit room or a cloudy sky at dusk; for reference, a single candle one meter away provides only about 1 lux. The new feature will also rely on new camera hardware to achieve the intended results.

New ambient features

Thanks to a new "nanoTPU" in the low-power section of Tensor G6, Google plans to add several ML-based always-on features to Pixel phones. A good chunk of these are health-related, such as detection of "agonal breathing, cough, snore, sneeze, and sleep apnea, fall detection, gait analysis and sleep stages monitoring." There's also emergency sound event detection.

Other features target activity tracking, like "Running ML," a collection of tools for runners such as "coachable pace" and "balance & oscillation" analysis.

The Pixel 11 might also support more Quick Phrases, but unfortunately, the documents don't list which ones.

Which potential new features are you most excited about? Let us know in the comments below!