Apple is perceived as a laggard in the AI race. Despite that, Apple has developed the single best computer for AI research. The new Mac Studio with an M3 Ultra chip, which supports up to 512 GB of unified memory, is the easiest and cheapest way to run powerful, cutting-edge LLMs on your own hardware.

The latest DeepSeek v3 model, which sent shockwaves through the AI space with performance comparable to ChatGPT, can run entirely on a single Mac, Apple AI researchers revealed on Monday.

It’s a machine that fits comfortably on your desk rather than in a server farm, and it costs about as much as a used Honda Civic, not a new Lamborghini.

How did this happen? Most remarkably of all, by sheer coincidence. Here’s why the Apple silicon architecture makes for the best AI hardware — a use case Apple didn’t mean to design it for.

Massive AI models running on Mac Studio

Photo: Nana Dua/Pexels

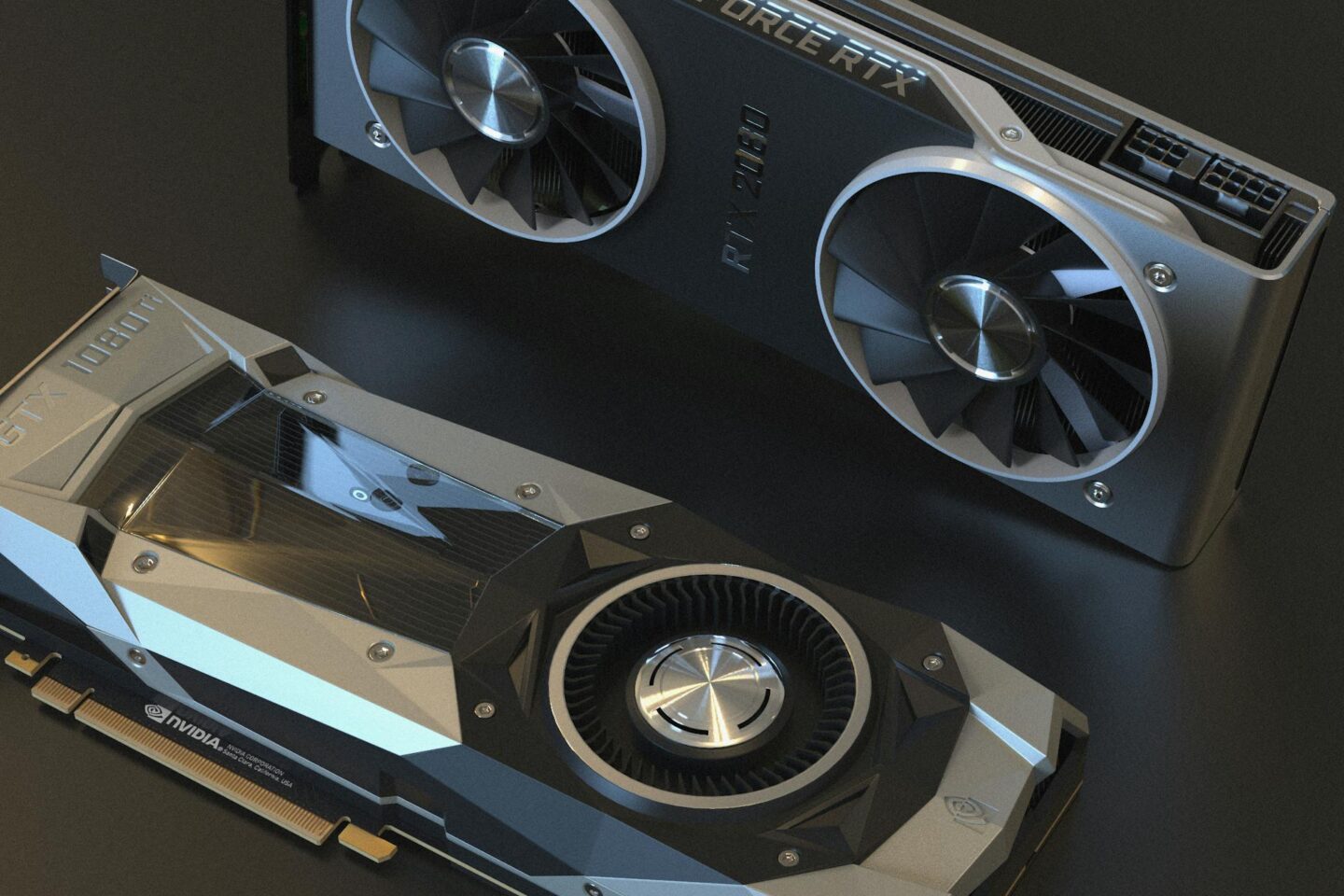

AI models are largely powered by GPUs, the part of a computer dedicated to graphics. Just like drawing 3D models or rendering videos, which are other GPU-centric tasks, LLMs (large language models) need a lot of parallel processing to multiply enormous matrices as fast as possible. GPUs are explicitly designed for that particular kind of math.

But unlike 3D games and video rendering, LLMs and other AI models require much more memory. The Nvidia RTX 4090, a high-end consumer GPU that a PC gamer might put in their custom rig, has 24 GB of memory. Even DeepSeek R1, an LLM notable for its industry-leading efficiency, requires over 64× as much memory.

There’s a huge advantage to running these models locally. You don’t have to pay for a cloud-based AI service; the only cost is the price of the machine and its electricity. You also avoid the network latency, usage limits and security concerns that come with working across the internet on someone else’s server.

Apple’s unified memory architecture is unexpectedly great for this

Photo: iFixit

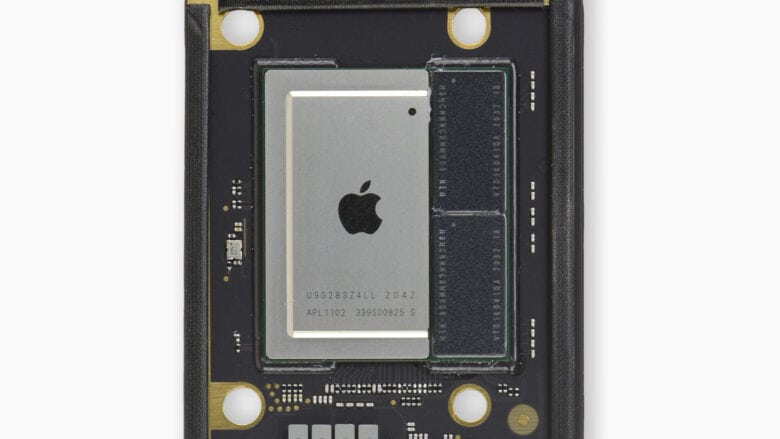

Apple silicon is very different from a traditional PC. The CPU, GPU and NPU (neural processing unit, which Apple calls the Neural Engine) are all fabricated on the same die, in the same chip. All three share a single pool of memory, rather than keeping separate memory for computing and graphics.

For gaming, the fact that users can’t add a bigger GPU than what Apple fabricates into the chip is a downside. You can’t buy Apple’s highest-end GPU without also buying its highest-end CPU and paying the Tim Cook tax for a bunch of extra memory and SSD storage.

But for machine learning and AI research, Apple silicon gives you the most memory per dollar by a long shot. The cheapest M4 Mac mini, at $599, comes with 16 GB. An Nvidia 4060-based graphics card with a similar amount of memory might cost as much, and that is before you buy the rest of the computer.

The M3 Ultra Mac Studio with 512 GB of unified memory might cost a pretty penny at $9,499, but you can run the latest DeepSeek v3 model entirely on-device. That amount of memory is only otherwise available on enterprise-grade server farm hardware that’s “make a phone call to get a quote”-expensive.
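
To put the memory-per-dollar claim in rough numbers, here is a minimal Python sketch that divides each Mac's list price by its unified memory, using only the two prices quoted above. The function and variable names are made up for illustration, and the figure covers the whole computer, not just the memory.

```python
# Rough "dollars per gigabyte of unified memory" comparison.
# Prices and capacities are the ones quoted in this article; the price
# buys the entire machine, so this is only a crude yardstick.

def dollars_per_gb(price_usd: float, memory_gb: float) -> float:
    """Return the list price divided by the memory capacity."""
    return price_usd / memory_gb


# (price in USD, unified memory in GB)
machines = {
    "M4 Mac mini": (599, 16),
    "M3 Ultra Mac Studio": (9499, 512),
}

for name, (price, memory) in machines.items():
    print(f"{name}: ${dollars_per_gb(price, memory):.2f} per GB")
```

By this crude measure, the 512 GB Mac Studio works out to roughly half the per-gigabyte price of the base Mac mini.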

The community sharing Mac-optimized local models

On Monday, in a social media post, Apple machine learning researcher Awni Hannun revealed he was running a new large language model from Chinese AI startup DeepSeek on an M3 Ultra Mac Studio with 512 GB of unified memory.

“The new Deep Seek V3 0324 in 4-bit runs at > 20 toks/sec on a 512GB M3 Ultra with mlx-lm!” he wrote. MLX-LM is software for running large language models on Apple silicon.

The new model, named DeepSeek-V3-0324, was released quietly by DeepSeek as an open source project on Hugging Face, an online AI repository. It weighs in at 641 GB and boasts 670 billion parameters, and is reportedly comparable to the latest generative AI models from giants like OpenAI or Anthropic, whose models require giant data centers to run.
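
A back-of-the-envelope calculation shows why the 4-bit version matters. The Python sketch below assumes the weights dominate memory use and that every parameter is stored at the same bit width. Real quantized models carry extra overhead (scaling factors, the context cache), so treat the results as lower bounds. The function name is hypothetical.

```python
# Estimate how much memory a model's weights need at a given precision.
# Assumption: size = parameters x bits per weight, with no overhead.

def weight_footprint_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS = 670e9           # parameter count quoted in this article
UNIFIED_MEMORY_GB = 512  # top Mac Studio configuration

for bits in (16, 8, 4):
    size = weight_footprint_gb(PARAMS, bits)
    verdict = "fits" if size < UNIFIED_MEMORY_GB else "does not fit"
    print(f"{bits:>2}-bit: ~{size:,.0f} GB ({verdict} in {UNIFIED_MEMORY_GB} GB)")
```

At 4 bits per weight, 670 billion parameters need roughly 335 GB, which leaves headroom on a 512 GB machine. At 8 bits, the same weights would need about 670 GB and would not fit.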

But Hugging Face community members already have DeepSeek-V3-0324 up and running on a $9,499 Mac Studio. And if you connect three of these Mac Studios together using Thunderbolt 5 — as startup Exo Labs has done — you can run the full DeepSeek R1 model with a longer context.

These breakthroughs in efficient local performance significantly undermine the venture capital-funded companies that have dropped billions on massive data centers full of pricey Nvidia chips.

This was all a happy little accident

Photo: Apple

Did Apple mean to make the best AI computer in the world when designing the Apple silicon architecture? Certainly not.

Apple began designing in-house processors for iPhones and iPads in 2008, and its first chip, the A4, debuted in 2010. Rumors of a Mac powered by Apple silicon began circulating in earnest in the mid-2010s.

This was many years before the explosion of large language models took the world by storm, and an embarrassingly long time before Apple executives started paying attention.

Apple’s pitch for the benefits of the unified memory architecture, from the press release of the M1 chip, was all about how it “allows all of the technologies in the SoC to access the same data without copying it between multiple pools of memory.” It was all about giving more power and battery life to the MacBook Air.

The next time Tim Cook ends a keynote with one of his signature turns of phrase, “We can’t wait to see what you do with it” — know that sometimes, he really means it.