Whether I’m shopping, hanging out with the fam, or staying productive, AI is my go-to solution to get stuff done. That’s why I couldn’t help but wonder how Meta Ray-Bans and Apple Visual Intelligence compare when it comes to seeing the world through an AI lens.

Ray-Ban Meta smart glasses are equipped with a 12-megapixel camera, open-ear speakers, and a microphone, enabling users to capture photos and videos, listen to music, make calls, and even livestream directly to platforms like Facebook and Instagram—all without reaching for their smartphones.

Apple Visual Intelligence is part of Apple Intelligence. It lets users with an iPhone 16 series device use the iPhone’s camera to get detailed information about their surroundings. Here’s what happened when I put the two AI technologies head-to-head.

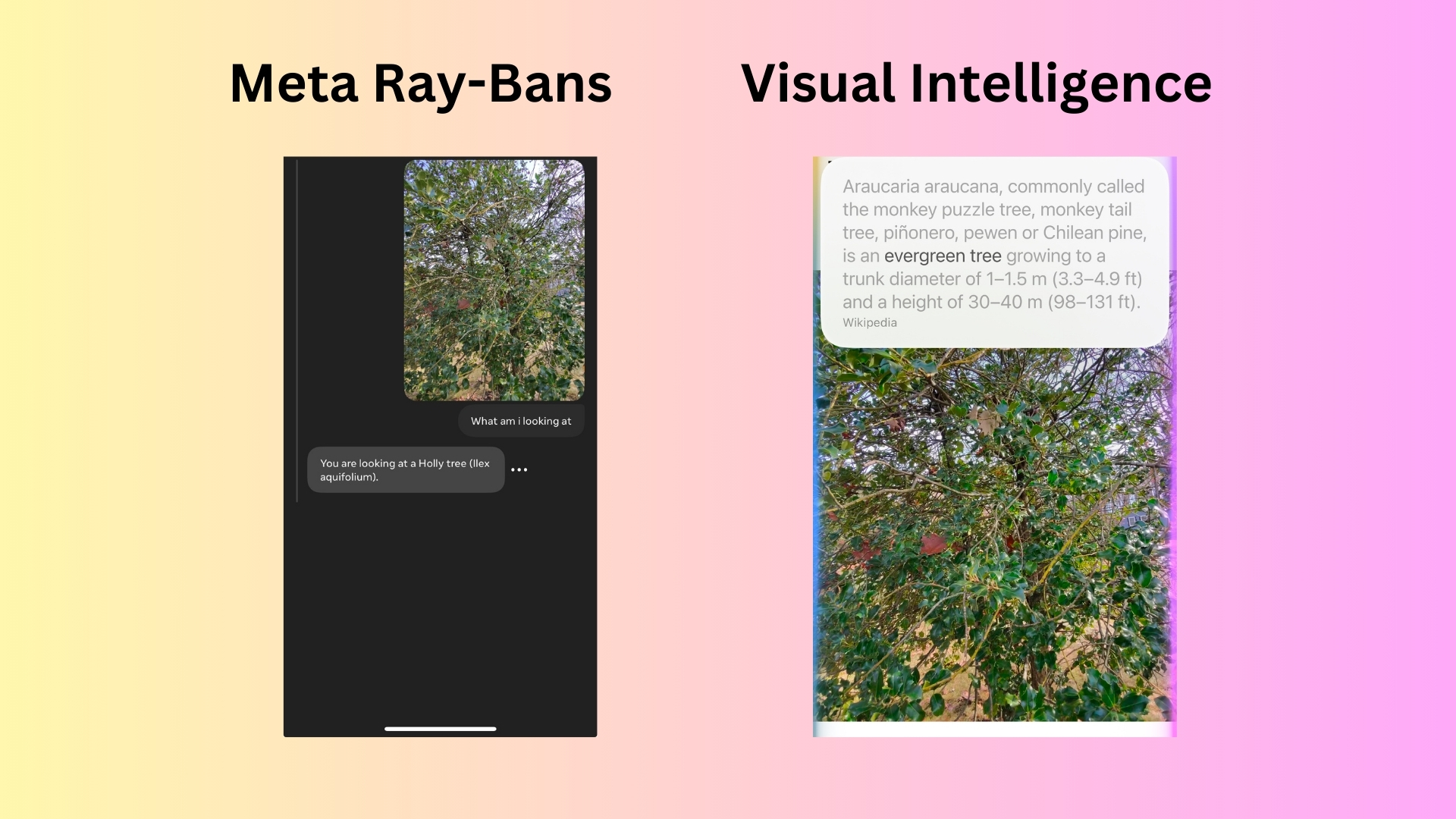

1. Nature

Prompt: “Look at this tree and tell me the kind.”

Meta Ray-Bans immediately knew that this was a holly tree. Even I knew because the pointy leaves are a dead giveaway.

Visual Intelligence, using ChatGPT, described the image as an evergreen tree. I was really surprised that Visual Intelligence did not get the answer right, especially since the image was taken fairly close up.

Winner—Meta Ray-Bans for the win here. I really enjoyed using them all around our yard to learn about different trees, bushes, and animals. With each prompt, Meta was able to clearly identify the object in nature without hesitation. Visual Intelligence gave the bare minimum information and sometimes stumbled with giving exact responses the way Meta did.

2. Playground

Prompt: “Look at this game and tell me who won.”

Meta Ray-Bans are a fun way to enjoy the park with my kids because I can take pictures and record videos without my phone. For this experiment, I asked Meta to tell me who won the tic-tac-toe game on the playground equipment. At first it said no one had won, but then it gave me the accurate response.

Visual Intelligence knew that the picture was taken at a playground but could not determine a winner based on the image. It did not understand the rules of the game the way Meta did, although it offered a solution if I wanted to share the details of the game.

Winner—Meta Ray-Bans. Despite a hiccup with the initial prompt, Meta came through to win this round. It even apologized for not getting the answer right the first time. Apple’s Visual Intelligence did not understand that it was a game of tic-tac-toe, let alone that “O” was the winner with three in a row.

3. Food

Prompt: “Tell me what kind of muffin this is and the calorie count.”

Meta Ray-Bans told me that I was holding a muffin that looked homemade or from a bakery (nice!). I appreciated the compliment, but the muffins were homemade this morning. I asked for the calorie count, but it wasn’t able to give that to me.

Visual Intelligence also knew that it was a homemade muffin and gave me a better estimate of the calorie count, something I knew it could do, as I’ve tried it before. Although the calories were a rough estimate because it was a homemade muffin, the count was closer to the real number (190 calories per muffin based on the recipe).

Winner—Visual Intelligence. Although both AIs did a pretty similar job, Visual Intelligence came through with a bit more accuracy this time.

4. Animals

Prompt: “Tell me what I see and the age of the animal.”

Meta Ray-Bans gave me a description of my cat during his typical workday. I was curious if Meta could identify the age of the cat as well as it identified the type of cat, but it gave me a generic response. It kept saying, “I can’t help with requests to identify people or discuss their appearance.” Obviously my cat isn’t a person (despite his best efforts), so I figured that’s about as much info as I would get.

Visual Intelligence identified my cat and said the room looked cozy. Although it could not offer information about the cat’s age, it at least knew that the image was a cat and responded appropriately by saying it would need more information to make that determination.

Winner—Visual Intelligence. This was another close one, but I have to give it to Visual Intelligence for its response explaining why it could not determine my cat’s age, rather than giving a generic response like Meta Ray-Bans. As a side note, I’ve tried this a few times with Meta Ray-Bans, and the inability to zoom in makes it difficult to identify animals and birds that are farther away. Hopefully an upgrade is coming with the ability to zoom in.

5. Instrument

Prompt: “Tell me the instrument and the best songs played on it.”

Meta Ray-Bans knew immediately that I was holding my daughter’s ukulele. When prompted to tell me songs that are good for this particular instrument, it gave me a list of songs that my daughter has actually played. I was really impressed.

Visual Intelligence came through with the name of the instrument, but I had to ask Siri several times for a list of songs that are best played on the ukulele. It kept saying it was listening for songs. I tried several times and it eventually came up with a list of songs that had a few of the same ones Meta mentioned.

Winner—Meta Ray-Bans. Meta gave a quick and interactive answer while Visual Intelligence stumbled when I tried to continue the conversation. Siri dropped the ball.

6. Art

Prompt: “What is this painting?”

Meta Ray-Bans knew I was looking at a digital image of Vincent van Gogh’s famous painting, Starry Night. It then gave me detailed background about the painting and a little history about the painter.

Visual Intelligence also told me about the painting and Vincent van Gogh. It did exactly what I asked for without adding anything else.

Winner—Meta Ray-Bans, although it was close. I’m giving Meta the win here because it knew that the image was a digital representation on a computer screen. I appreciated that extra element of information.

7. Odd toy

Prompt: “What do you see?”

Meta Ray-Bans took one look at this silly toy and knew exactly what it was and detailed what makes it special. It gave even more information than I was expecting.

Visual Intelligence also knew that it was a fidget toy, but didn’t mention much else. From what I’ve gathered in this experiment, Visual Intelligence gives as little information as necessary while still answering the question.

Winner—Meta Ray-Bans win again. I appreciated that it not only identified the object, but also came up with a few good uses for it. Meta seems to go above and beyond when it comes to explaining what it sees, giving the user a well-rounded overview.

Meta Ray-Bans can do everything Apple Visual Intelligence can and more, plus they offer a hands-free experience. Meta seems to offer a more thorough response, and it documents the conversations so I can go back and look at them again. Unless I take a screenshot, I can’t do that with Apple Visual Intelligence.

Meta seemed to understand the assignment every time, while I often had to repeat myself with Apple Visual Intelligence until Siri understood what I was trying to do. Overall, Meta Ray-Bans offer a more immersive experience that lets me seamlessly document and share my world. After doing this experiment, I’m even more excited about the possibility of a ChatGPT device without Siri.